4 Essential LLM Model Tools to Find PMF with Your AI SaaS

Discover four essential LLM model tools—Phospho, Helicone, Ollama, and LangChain—designed to help AI SaaS businesses achieve product-market fit. Compare their key features and find the best fit for refining and scaling your models with effective text analytics capabilities.

If you’ve been in the startup game for a while, you probably already know that achieving product-market fit (PMF) is the ultimate goal of any AI SaaS business. This milestone shows that your product is meeting the needs of your customers and working as it should.

Despite its importance, product-market fit can be hard to reach and maintain, and failing to achieve it is often cited as one of the main reasons tech startups fail.

This is frustrating, because tech startups are often only one small pivot away from finding their ideal market and use cases. This is where specialized LLM model tools come in.

The right LLM tool can help you refine, scale and monitor your AI models in real time to make sure they align with customer needs, ultimately getting you to PMF faster and with fewer iterations.

In this article, we’ll compare four essential LLM model tools – Phospho, Helicone, Ollama and LangChain – and demonstrate how the unique features of each can help you achieve product-market fit.

We’ll be looking at the tools’ main features, real-world use cases and the unique value they can bring to your AI models.

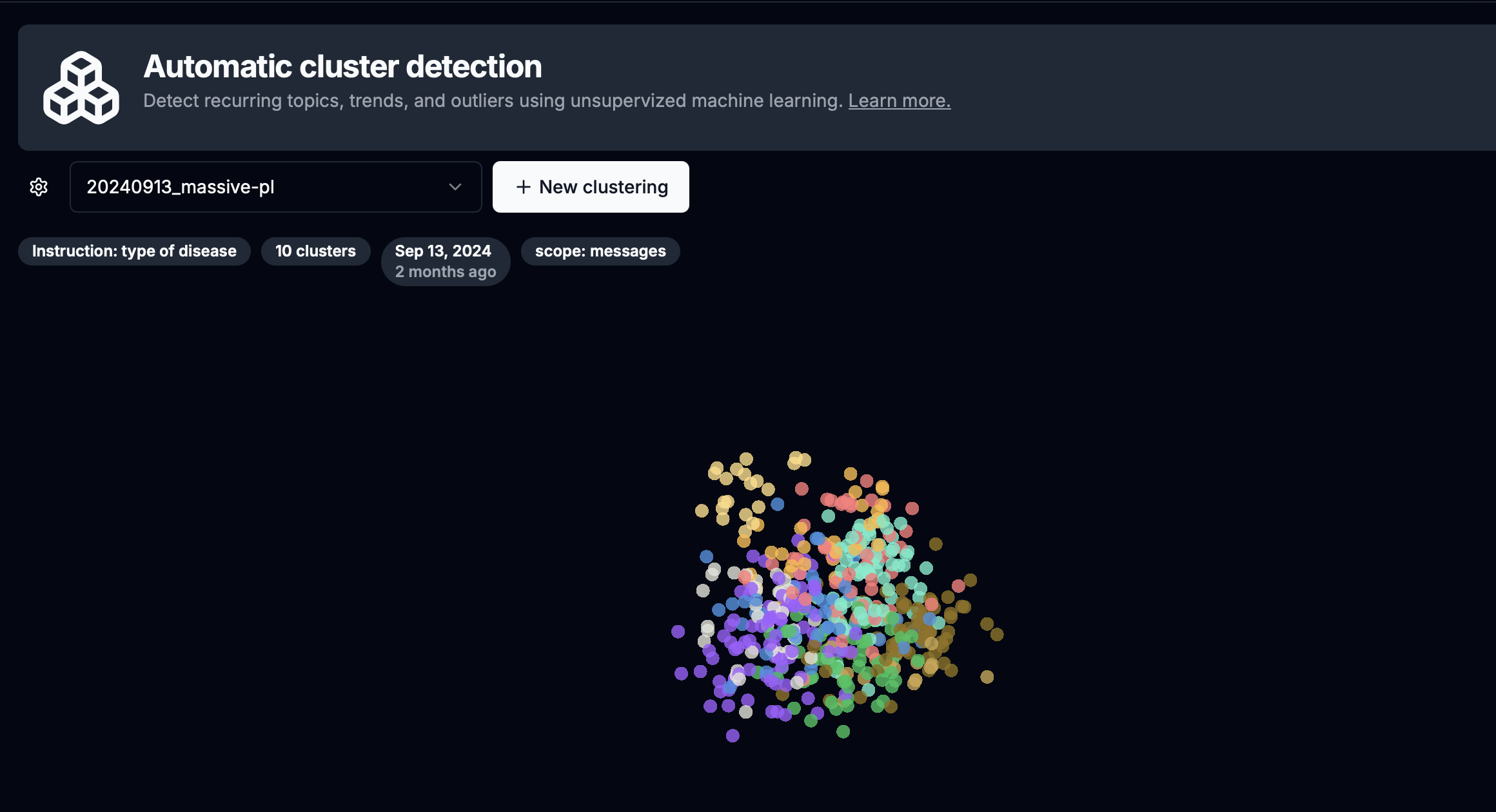

Phospho – The Advanced Text Analytics Platform

Phospho is an open-source text analytics platform that provides data insights from user interactions to help LLM apps improve and find their place in the market.

The platform was designed to provide real-time monitoring and evaluation of LLM applications, so it can help you discover outliers and misuse of your product, identify power users and reduce churn, resulting in a better product overall.

Phospho’s Key Features

Real-Time Monitoring

With real-time text analytics and monitoring, Phospho lets you observe and log user inputs and outputs, helping you make data-informed decisions.

This means you can perform a live temperature check to see how users engage with your app, allowing you to respond to feedback quickly.
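
To make the idea of logging inputs and outputs concrete, here is a minimal sketch of an interaction log in plain Python. It is an illustration only and does not use Phospho's SDK; the `Interaction` and `InteractionLog` names and their fields are assumptions made for this example.

```python
import json
import time
from dataclasses import dataclass, field, asdict


@dataclass
class Interaction:
    """One user/model exchange. Field names are illustrative."""
    user_id: str
    user_input: str
    model_output: str
    timestamp: float = field(default_factory=time.time)


class InteractionLog:
    """In-memory stand-in for an analytics backend."""

    def __init__(self):
        self.records = []

    def log(self, user_id, user_input, model_output):
        record = Interaction(user_id, user_input, model_output)
        self.records.append(record)
        return record

    def to_jsonl(self):
        # One JSON object per line, a common shape for export or later analysis.
        return "\n".join(json.dumps(asdict(r)) for r in self.records)


log = InteractionLog()
log.log("user-1", "How do I reset my password?", "Go to Settings > Security.")
log.log("user-2", "Cancel my plan", "I can help with that.")
print(log.to_jsonl())
```

In a real app, the `log` call would sit right after each model response, so every exchange is captured as it happens.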

Easy Integrations

Phospho works with JavaScript and Python codebases and with CSV data, so it fits into the tools you already use and makes data handling a breeze.

Identify Issues

With Phospho, you can quickly identify and analyze edge cases, allowing you to handle unexpected scenarios and strange user behavior.

Customizable Goals

Set personalized KPIs to track your model’s performance and generate tailored insights that reflect your business goals.
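
As a rough illustration of a custom KPI, the sketch below computes the share of conversations that meet a business-defined success test. The `success_rate` helper, the `escalated` field and the sample data are hypothetical and are not part of Phospho.

```python
def success_rate(interactions, is_success):
    """Share of interactions that pass a business-defined success test."""
    if not interactions:
        return 0.0
    return sum(1 for i in interactions if is_success(i)) / len(interactions)


# Hypothetical support-chatbot logs; "escalated" marks a hand-off to a human.
interactions = [
    {"user_id": "u1", "escalated": False},
    {"user_id": "u2", "escalated": True},
    {"user_id": "u1", "escalated": False},
    {"user_id": "u3", "escalated": False},
]

# KPI: conversations resolved without escalation.
resolved = success_rate(interactions, lambda i: not i["escalated"])
print(f"Resolved without escalation: {resolved:.0%}")
```

Passing the success test as a function keeps the metric tied to your own definition of success, not a generic one.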

Easy Iteration

Thanks to Phospho’s automatic evaluation pipelines, you can easily measure model performance and continuously improve your product.

Benefits

One of the main benefits of the platform is its fast feedback loops. This feature could empower your team to respond to user behavior quickly and efficiently, so you can refine your model based on real-time data and make it even more effective.

You can also test your models quickly and easily to check which parts are working best for your customers or users.

Phospho also supports tailored metrics, making it easy to set business goals and objectives – a crucial step on your journey to achieving PMF.

Use Case

Phospho could be used to monitor and evaluate a customer support chatbot.

The platform would log conversations and flag frequent users, so the team could regularly adjust the chatbot’s responses, improving customer satisfaction and helping bring the product closer to PMF.

Finding Product-Market Fit

Phospho’s real-time analytics and evaluation capabilities make it a great option for AI SaaS companies looking to iterate quickly and accelerate product-market fit. The platform will help you continually improve and get closer to aligning your product with user needs – a core requirement for PMF.

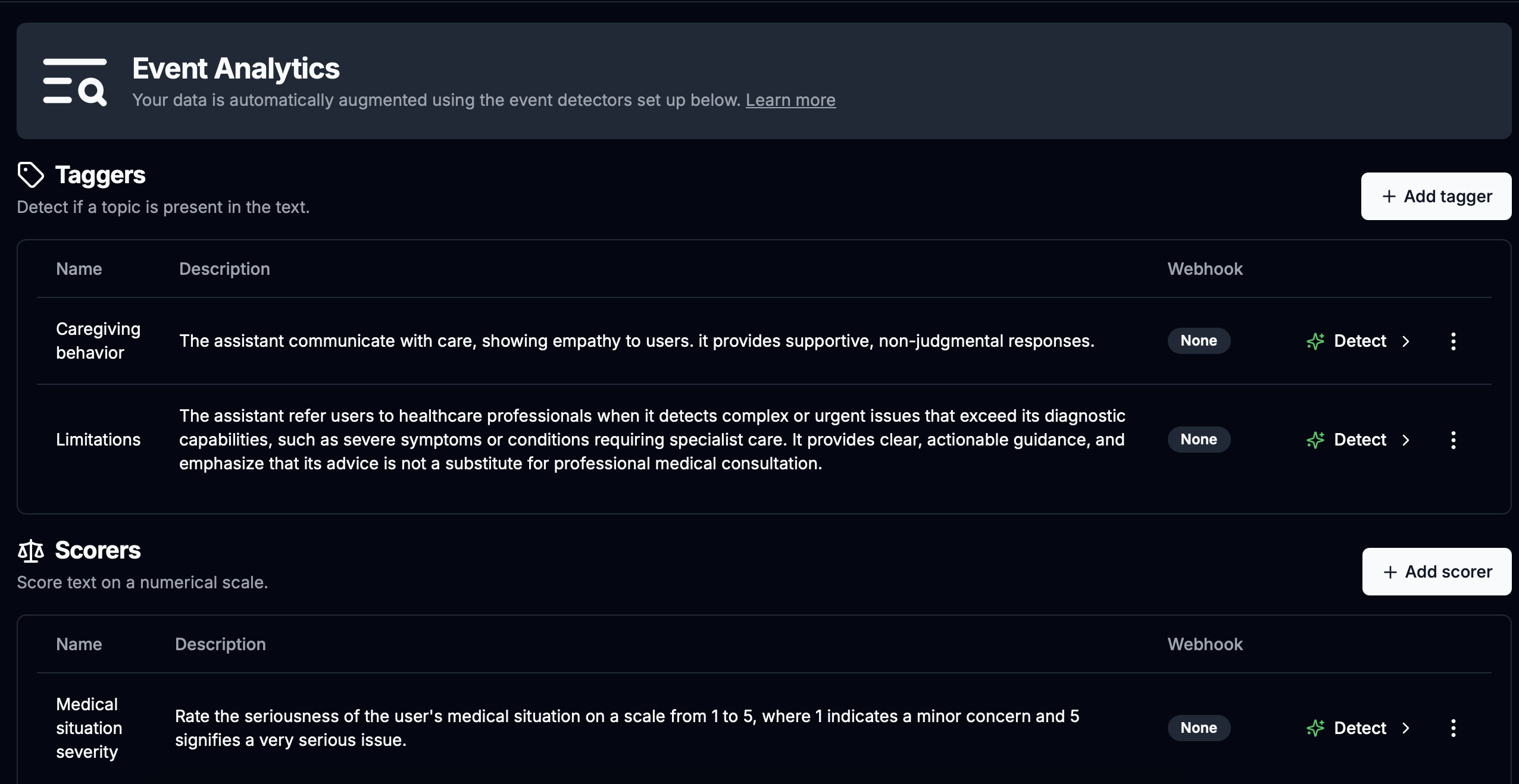

Helicone – The LLM Monitoring and Cost Management Platform

Helicone is a platform designed to monitor LLMs. It specializes in tracking performance, usage and cost for SaaS startups.

Helicone’s Key Features

Performance Monitoring

Helicone tracks latency, response times and user errors, helping your team understand key issues and improve your model’s performance.

Cost Tracking

One of Helicone’s core features is its usage and cost-tracking function. This feature provides detailed insights into API usage and the costs associated with your LLM operation, so it can help you closely monitor your finances and stay on budget while you’re working to achieve PMF.
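
The arithmetic behind cost tracking is simple enough to sketch. The example below is not Helicone's API. It assumes you already have token counts per request, and the prices in `PRICE_PER_1K` are made-up placeholders, since real prices vary by provider and model.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens.
PRICE_PER_1K = {"input": 0.5, "output": 1.5}


def request_cost(input_tokens, output_tokens, prices=PRICE_PER_1K):
    """Cost of one request: tokens / 1000 * price, summed over both directions."""
    return (input_tokens / 1000) * prices["input"] + (output_tokens / 1000) * prices["output"]


def cost_by_user(requests):
    """Total spend per user across a list of request records."""
    totals = defaultdict(float)
    for r in requests:
        totals[r["user_id"]] += request_cost(r["input_tokens"], r["output_tokens"])
    return dict(totals)


requests = [
    {"user_id": "u1", "input_tokens": 1000, "output_tokens": 2000},
    {"user_id": "u2", "input_tokens": 4000, "output_tokens": 0},
    {"user_id": "u1", "input_tokens": 2000, "output_tokens": 0},
]
totals = cost_by_user(requests)
print(totals)
```

Grouping by user (or by session or feature) is what turns a single monthly bill into something you can act on.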

Key Insights

Helicone provides user and session-level insights, allowing granular tracking of all user interactions. This will help your team understand key user behavior patterns and iterate, helping you optimize your product and get closer to PMF.

Alerts for Key Metrics

With customizable alerts for key metrics, you are notified as soon as performance or cost issues arise.
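
A threshold-based alert can be sketched in a few lines. This is a generic illustration, not how Helicone implements alerts; the `check_alerts` function, the threshold names and the limits are all assumptions.

```python
import statistics


def check_alerts(latencies_ms, error_count, thresholds):
    """Return a message for each threshold breached in this window of requests."""
    if not latencies_ms:
        return []
    alerts = []
    median_latency = statistics.median(latencies_ms)
    error_rate = error_count / len(latencies_ms)
    if median_latency > thresholds["median_latency_ms"]:
        alerts.append(f"median latency {median_latency:.0f} ms exceeds limit")
    if error_rate > thresholds["error_rate"]:
        alerts.append(f"error rate {error_rate:.0%} exceeds limit")
    return alerts


# Hypothetical limits and one window of observed requests.
thresholds = {"median_latency_ms": 800, "error_rate": 0.10}
alerts = check_alerts([200, 250, 900, 1200, 1500], error_count=1, thresholds=thresholds)
for message in alerts:
    print(message)
```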

Easy to Use

Helicone offers a simple dashboard interface, making it easy for even non-technical team members to use.

Benefits

One of the main benefits of Helicone is that it helps AI SaaS companies manage their operational costs as they scale. It also provides valuable performance data, which means you can troubleshoot faster and work towards creating a better user experience.

Helicone supports cross-functional use, meaning you can bring non-technical team members on board (such as marketing or sales managers) to understand how your AI model is being used in the real world.

Use Case

A fintech SaaS company could use Helicone to monitor user sessions and identify peak usage times. These insights would help the company optimize the model’s performance while scaling its resources.

Finding Product-Market Fit

Helicone’s usage insights and cost transparency could be invaluable for SaaS businesses that want to reach PMF quickly while still hitting their financial and business goals.

Ollama – The Deployment and Model Hosting Platform

Ollama is an open-source tool for running, hosting and serving LLMs on your own hardware. It provides a user-friendly workflow for downloading and deploying models.

Ollama’s Key Features

Easy Updates

Ollama’s easy update feature allows for quick redeployment, which is essential for iterative AI development.

LLM Model Hosting and Deployment

The platform offers a streamlined approach to deploying LLMs, reducing the need for extensive infrastructure.
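
Once Ollama is running, it serves a local HTTP API that an application can call. The sketch below builds a request for the `/api/generate` endpoint on Ollama's default port using only the standard library. The model name `llama3` and the prompt are examples, and the `generate` helper only works if an Ollama server is running locally with that model already pulled.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_generate_request(model, prompt):
    """Prepare a non-streaming generation request without sending it."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model, prompt):
    """Send the request; requires a running Ollama server with the model pulled."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


# Building the request is safe to run anywhere; calling generate() needs the server.
req = build_generate_request("llama3", "Suggest a product tagline.")
print(req.full_url)
```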

Compliance and Privacy

Because Ollama runs models on infrastructure you control, prompts and outputs do not have to leave your environment, which suits SaaS companies that prioritize compliance and data privacy regulations.

Version Control

The version control feature means teams can easily maintain and test multiple versions of an AI model, making it easy to A/B test and update.
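
One common way to A/B test two model versions is to assign each user to a variant deterministically, so the same user always sees the same version. The sketch below shows that general technique, not an Ollama feature; the variant names are placeholders.

```python
import hashlib


def assign_variant(user_id, variants=("model-v1", "model-v2")):
    """Deterministically map a user to a variant using a hash of their ID."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


# The same user always lands in the same bucket across calls and restarts.
for user_id in ["alice", "bob", "carol"]:
    print(user_id, assign_variant(user_id))
```

Hashing avoids storing assignments and keeps the split stable across deployments, which matters when you compare metrics between versions over several days.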

User-Friendly

Ollama is designed to be accessible for both technical and non-technical users, making it easy for the whole team to manage AI models.

Benefits

Ollama simplifies the technical aspects of model deployment, freeing up vital team resources so developers can focus on PMF.

The platform also supports rapid iteration and testing, which helps AI SaaS companies adapt their models quickly to better align with user needs. Its compliance-focused features also help SaaS companies operate in highly regulated industries, like finance.

Use Case

An e-commerce SaaS company could leverage Ollama to deploy a recommendation engine. This would allow the team to iterate fast based on real-time customer feedback and optimize conversion rates.

Finding Product-Market Fit

Ollama’s simplicity and version control features would help SaaS companies deploy quickly while testing their AI features, getting them closer to PMF.

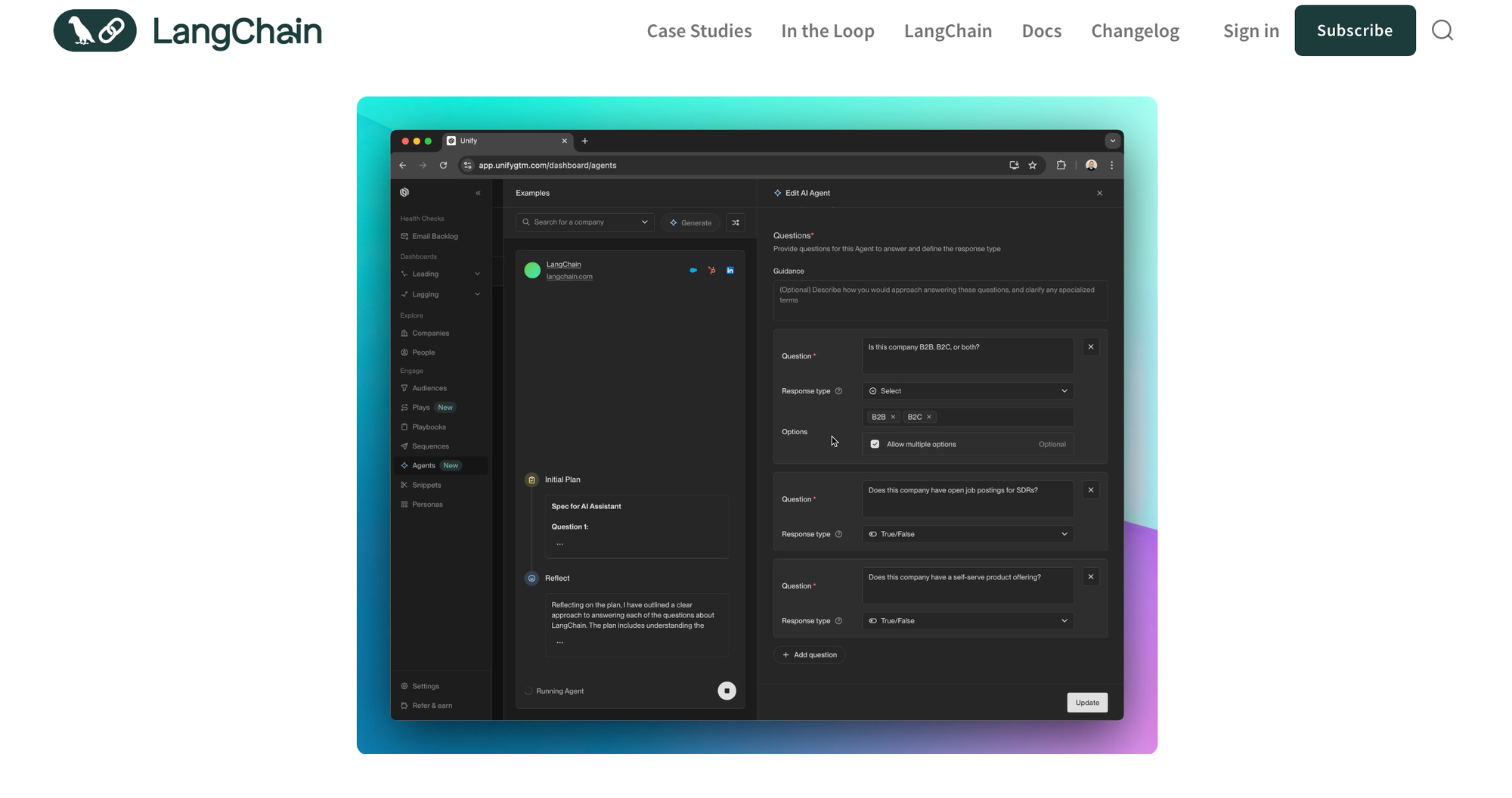

LangChain – Helping You Build LLM Applications

LangChain is a tool for building applications powered by LLMs. The platform specializes in dynamic workflows and chain-based model interactions.

LangChain’s Key Features

Memory Management

LangChain can retain context across sessions, resulting in much more responsive and coherent AI applications.
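
The idea behind conversational memory can be sketched without any framework: keep the most recent turns and prepend them to each new prompt. The `ConversationMemory` class below is a simplified stand-in written for this article. It is not LangChain's API, and it only keeps context in memory for the life of the process.

```python
from collections import deque


class ConversationMemory:
    """Keeps the last few turns and folds them into the next prompt."""

    def __init__(self, max_turns=3):
        # deque with maxlen silently drops the oldest turn when full.
        self.turns = deque(maxlen=max_turns)

    def add(self, user_message, assistant_message):
        self.turns.append((user_message, assistant_message))

    def build_prompt(self, new_message):
        history = "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.turns)
        return f"{history}\nUser: {new_message}\nAssistant:".lstrip()


memory = ConversationMemory(max_turns=2)
memory.add("Hi, I'm Sam.", "Hello Sam!")
memory.add("I use the Pro plan.", "Got it.")
memory.add("I need an invoice.", "Sure, for which month?")
print(memory.build_prompt("March, please."))
```

Capping the number of turns keeps prompts within the model's context window at the cost of forgetting older exchanges.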

Tool Integration

The platform easily integrates with other APIs and custom tools, saving teams time and resources when optimizing their LLM application.

Interactive Testing

LangChain provides an interactive testing environment for developers who want to experiment with workflows before deploying.

Chain-Based Workflows

This feature allows users to build complex applications by “chaining” different models or processes together.
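
Chaining is essentially function composition: each step takes the previous step's output as its input. The plain-Python sketch below illustrates the pattern with stubbed steps. The `chain` helper, the step functions and the sample documents are invented for this example and are not LangChain code; in a real application the `retrieve` and `summarize` steps would call a search index and an LLM.

```python
from functools import reduce


def chain(*steps):
    """Compose steps left to right: each output becomes the next input."""
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)


DOCS = {
    "refunds": "Refunds are issued within 5 days. Contact support to start one.",
    "billing": "Invoices are sent monthly. Update your card in Settings.",
}


def retrieve(query):
    # Stand-in for a search step: match documents whose key appears in the query.
    hits = [text for key, text in DOCS.items() if key in query.lower()]
    return {"query": query, "docs": hits}


def summarize(state):
    # Stand-in for an LLM call: keep only the first sentence of each document.
    summaries = [doc.split(". ")[0].rstrip(".") + "." for doc in state["docs"]]
    return {**state, "summary": " ".join(summaries)}


def format_answer(state):
    return state["summary"] or "No matching documents found."


pipeline = chain(retrieve, summarize, format_answer)
print(pipeline("How do refunds work?"))
```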

Easy LLM Integrations

LangChain easily integrates with LLMs, meaning it can be adapted to different industries and technologies.

Benefits

LangChain facilitates the creation of dynamic and complex applications that respond quickly to user needs, helping AI SaaS companies reach PMF swiftly.

The tool can enhance user experience with memory management, which results in a much more personalized and engaging product.

Compared to other LLM model tools, LangChain can reduce development time substantially due to its seamless integration with other tools, making it easier for teams to adapt and iterate.

Use Case

A knowledge management SaaS could use LangChain to build an engaging search tool that retrieves, analyzes and summarizes documents based on user queries.

The tool’s memory management function would help create a more personalized product, resulting in higher customer satisfaction and retention.

Finding Product-Market Fit

LangChain’s ability to build complex, responsive applications would help SaaS companies adapt to their users’ needs and preferences, helping them create more tailored experiences and ultimately moving them closer to product-market fit.

Choosing the Right LLM Tool for Your Needs

Each of these tools supports AI SaaS companies on their journey to PMF in a different way. However, Phospho’s real-time text analytics and evaluation features make it the standout tool for companies that want to continuously refine their models and achieve product-market fit quickly, creating a product that users will love.

Want to take AI to the next level?

At Phospho, we give brains to robots. We let you power any robot with advanced AI – control, collect data, fine-tune, and deploy seamlessly.

New to robotics? Start with our dev kit.

👉 Explore at robots.phospho.ai.