How to add AI analytics to a Langchain agent?

Building an AI agent with Langchain is an exciting endeavor. In a few lines of Python, you have the prototype of an agent. You can chat with your data!

But like any cutting-edge technology, it comes with its own set of challenges. Hallucinations, incorrect responses, and misunderstandings can plague your Langchain-based agent.

What’s the first step to overcome these hurdles? Analytics.

Understanding the Analytics Spectrum

In software, analytics spans from performance metrics to user satisfaction insights.

- Performance Analytics: Dive deep into code execution details to catch bugs and optimize performance. Is everything working as intended?

- Product Analytics: Assess whether your agent meets the expectations of end-users. Am I making my users happy?

To create exceptional software, it's crucial to balance both types of analytics.

What Analytics Does Your Agent Need?

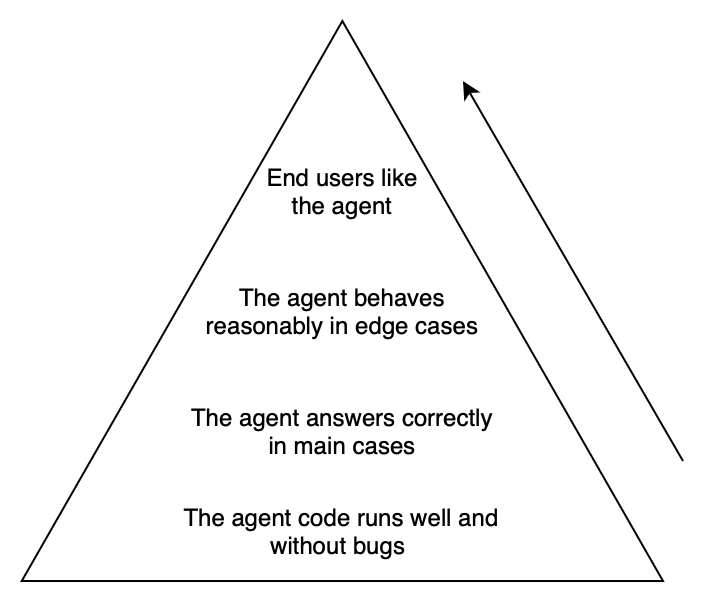

For Langchain agents, you want to make sure that:

- The agent code runs well and without bugs.

- The agent answers correctly in the main cases.

- The agent behaves reasonably in edge cases.

- End users like the agent.

The ideal approach is to progress systematically:

- Start with basic metrics like execution speed and error rate.

- Develop tailored tests for the agent's behavior in main and edge cases.

- Collect user feedback post-implementation.
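
The first two steps can be sketched in plain Python. This is only an illustration under assumptions: `fake_agent`, `AgentCase`, and `evaluate` are hypothetical names that belong to neither Langchain nor phospho, and the stub agent stands in for a real call to your chain.

```python
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class AgentCase:
    question: str
    expected_substring: str  # text the answer must contain to pass


def fake_agent(question: str) -> str:
    """Hypothetical stand-in for a real agent call."""
    if not question.strip():
        raise ValueError("empty question")
    if "concorde" in question.lower():
        return "The Concorde cruised at 2,179 km per hour."
    return "I don't know."


def evaluate(agent: Callable[[str], str], cases: List[AgentCase]) -> dict:
    """Run every case and report average latency, error rate, and pass rate."""
    latencies, errors, passed = [], 0, 0
    for case in cases:
        start = time.perf_counter()
        try:
            answer = agent(case.question)
        except Exception:
            errors += 1  # a crash counts as an error, not as a wrong answer
            continue
        finally:
            # Runs for successes and failures alike
            latencies.append(time.perf_counter() - start)
        if case.expected_substring.lower() in answer.lower():
            passed += 1
    return {
        "avg_latency_s": sum(latencies) / len(latencies),
        "error_rate": errors / len(cases),
        "pass_rate": passed / len(cases),
    }


cases = [
    AgentCase("What is the speed of the Concorde?", "2,179"),  # main case
    AgentCase("Who won the 2050 World Cup?", "don't know"),    # edge case: unanswerable
    AgentCase("   ", ""),                                      # edge case: empty input
]
report = evaluate(fake_agent, cases)
print(report)
```

Substring matching is a deliberately crude pass criterion. It is enough to catch regressions on a handful of known questions, but it says nothing about whether users are satisfied.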

User feedback is, by far, the most valuable thing you can get to improve an agent. But it can be hard to collect properly.

Langchain agent code

Assuming you have a basic Langchain agent for Doc Q&A, let's explore how to integrate analytics. For example, your code may look like this:

from langchain.prompts import ChatPromptTemplate
from langchain_community.chat_models import ChatOpenAI
from langchain_community.embeddings import OpenAIEmbeddings
from langchain_community.vectorstores import FAISS
from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough

vectorstore = FAISS.from_texts(
    [
        "Phospho is the LLM analytics platform",
        "Paris is the capital of Fashion (sorry not sorry London)",
        "The Concorde had a maximum cruising speed of 2,179 km (1,354 miles) per hour.",
    ],
    embedding=OpenAIEmbeddings(),
)
retriever = vectorstore.as_retriever()

template = """Answer the question based only on the following context:
{context}

Question: {question}
"""
prompt = ChatPromptTemplate.from_template(template)
model = ChatOpenAI()

retrieval_chain = (
    {"context": retriever, "question": RunnablePassthrough()}
    | prompt
    | model
    | StrOutputParser()
)

To run the chain, you invoke it this way.

question = "What is the speed of the Concorde?"
response = retrieval_chain.invoke(question)

The speed of the Concorde is 2,179km per hour.

Let’s add analytics to this chain.

Adding analytics to a Langchain agent

There are multiple ways to add analytics to a Langchain agent.

The basic idea is to log the agent’s inputs and outputs in a database, then compute metrics on those logs to get insights.

Doing this yourself can work for simple cases, but gets annoying quickly.
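
To make the do-it-yourself route concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The wrapper `make_logged_agent`, the `logs` table layout, and the stub `echo_agent` are all assumptions made for illustration; with a real chain you would wrap a function that calls `retrieval_chain.invoke`.

```python
import sqlite3
import time
from typing import Callable


def make_logged_agent(
    agent: Callable[[str], str], conn: sqlite3.Connection
) -> Callable[[str], str]:
    """Wrap an agent so every call is stored with its input, output, and latency."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS logs ("
        "input TEXT, output TEXT, error TEXT, latency_s REAL)"
    )

    def logged(question: str) -> str:
        start = time.perf_counter()
        output, error = None, None
        try:
            output = agent(question)
            return output
        except Exception as exc:
            error = repr(exc)
            raise
        finally:
            # Log successes and failures alike
            conn.execute(
                "INSERT INTO logs VALUES (?, ?, ?, ?)",
                (question, output, error, time.perf_counter() - start),
            )
            conn.commit()

    return logged


def echo_agent(question: str) -> str:
    """Hypothetical stand-in for a real agent call."""
    return f"You asked: {question}"


conn = sqlite3.connect(":memory:")
agent = make_logged_agent(echo_agent, conn)
agent("What is the speed of the Concorde?")
agent("What is phospho?")

# Compute basic metrics from the logs
calls, errors, avg_latency = conn.execute(
    "SELECT COUNT(*), COUNT(error), AVG(latency_s) FROM logs"
).fetchone()
print(f"{calls} calls, {errors} errors, {avg_latency:.6f}s average latency")
```

This covers call counts, error counts, and latency. Intermediate steps, user feedback, and dashboards each need more schema and more code, which is where the approach starts to get tedious.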

There are many analytics providers. They differ in:

- The amount of setup required

- The analytics level (performance analytics vs. product analytics)

- The broadness of insights

Integrated analytics (Langsmith)

You may have heard of Langsmith, the analytics platform created by Langchain to help debug your agent, with technical insights about execution speed and a step-by-step decomposition of each run.

Langsmith is mainly focused on performance analytics and debugging. While this is important, it doesn’t give you the full picture.

At the time of writing, Langsmith is in closed beta, which unfortunately means you cannot use it without an invitation.

Fortunately, alternatives exist.

Analytics with callbacks

A common way to add analytics is to add a callback when invoking the chain or the agent. Callbacks are functions called every time something happens.

The Langchain documentation contains some examples.
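
The callback idea itself fits in a few lines of plain Python. The sketch below is not Langchain's callback API, which uses handler classes with methods such as `on_agent_finish`. The names `run_steps`, `retrieve`, `answer`, and `record` are hypothetical and only illustrate the mechanism: the runner notifies every registered callback before and after each step.

```python
from typing import Any, Callable, Dict, List

# A callback receives an event name and a payload describing what happened
Callback = Callable[[str, Dict[str, Any]], None]


def run_steps(
    steps: List[Callable[[Any], Any]], value: Any, callbacks: List[Callback]
) -> Any:
    """Run steps in sequence, notifying callbacks around each one."""
    for step in steps:
        for callback in callbacks:
            callback("step_start", {"step": step.__name__, "input": value})
        value = step(value)
        for callback in callbacks:
            callback("step_end", {"step": step.__name__, "output": value})
    return value


def retrieve(question: str) -> str:
    """Hypothetical retrieval step."""
    return f"context for: {question}"


def answer(context: str) -> str:
    """Hypothetical answering step."""
    return context.upper()


events = []


def record(event: str, payload: Dict[str, Any]) -> None:
    """Analytics callback: keep a trace of what ran and when."""
    events.append((event, payload["step"]))


result = run_steps([retrieve, answer], "speed of the Concorde?", [record])
print(events)
```

Because the steps never reference the callbacks directly, analytics can be added or removed without touching the agent logic.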

Let’s focus on the example of adding analytics with phospho, which is a product analytics platform for LLM apps.

- Install the phospho module

pip install --upgrade phospho

- Create an account on the phospho platform. Add your API key and project id as environment variables.

export PHOSPHO_API_KEY="..."
export PHOSPHO_PROJECT_ID="..."

- Add the callback when invoking your chain or your agent.

from phospho.integrations import PhosphoLangchainCallbackHandler

question = "What is the speed of the Concorde?"
response = retrieval_chain.invoke(
    question,
    # Add the callback in config
    config={"callbacks": [
        PhosphoLangchainCallbackHandler(),  # Note: you can add multiple callbacks
    ]},
)

And that’s it! phospho will log the input, output, and intermediate steps of the chain.

phospho logging runs asynchronously and the heavy computations happen remotely, so the impact on the agent’s execution speed is minimal.

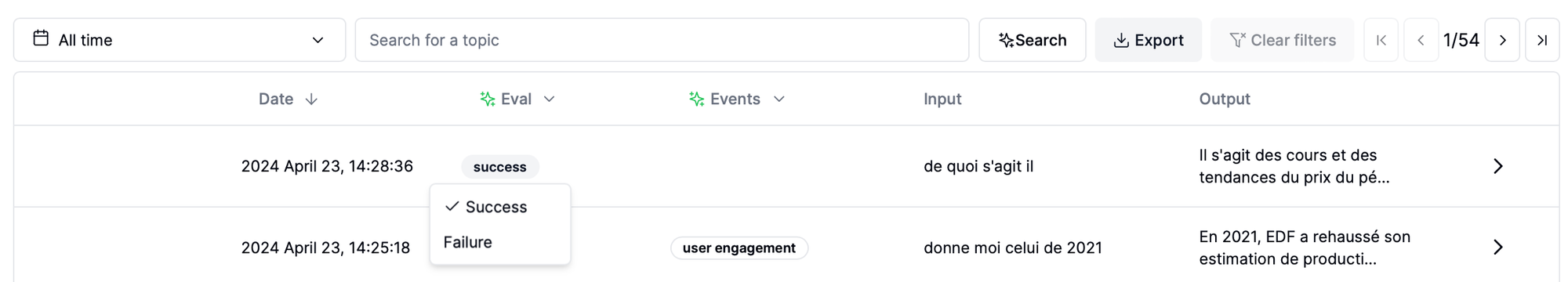

The chain input and output are logged to phospho in the “Tasks” tab.

To see more details about a log, click on View. In the raw task data, the intermediate steps of the chain and the retrieved Documents are also logged to phospho.

How to improve the product?

phospho automatically labels each task as a success or a failure using default criteria. This labeling can be improved with feedback given by you or by your users.

phospho also detects events. For example, here phospho detected that this interaction was a “question answering” event. You can set up custom events that trigger webhooks when detected, for example to receive a Slack message when a user discusses a certain topic.

By monitoring the success rate, comparing different versions, and running tests, you can get a feel for what really matters to your users.
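
As a rough illustration of what monitoring success rate across versions means, the sketch below computes a success rate per version from a list of task records. The record fields (`version`, `auto_label`, `user_feedback`) are hypothetical and do not reflect phospho's actual data schema. Letting user feedback override the automatic label is also an assumption made for this example.

```python
from collections import defaultdict
from typing import Dict, List, Optional


def success_rate_by_version(tasks: List[Dict[str, Optional[str]]]) -> Dict[str, float]:
    """Return the share of successful tasks for each agent version."""
    counts = defaultdict(lambda: [0, 0])  # version -> [successes, total]
    for task in tasks:
        feedback = task.get("user_feedback")
        # Assumption: explicit feedback wins over the automatic label
        label = feedback if feedback is not None else task["auto_label"]
        counts[task["version"]][0] += int(label == "success")
        counts[task["version"]][1] += 1
    return {
        version: successes / total
        for version, (successes, total) in counts.items()
    }


tasks = [
    {"version": "v1", "auto_label": "success", "user_feedback": None},
    {"version": "v1", "auto_label": "success", "user_feedback": "failure"},
    {"version": "v2", "auto_label": "failure", "user_feedback": "success"},
    {"version": "v2", "auto_label": "success", "user_feedback": None},
]
rates = success_rate_by_version(tasks)
print(rates)
```

In this toy data, feedback flips one label in each version, which shows why automatic labels alone can misrepresent how a release is doing.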

Custom callback

For more advanced integration with Langchain, subclass the phospho callback handler and pass your subclass to the chain.

from typing import Any

from langchain_core.agents import AgentFinish
from phospho.integrations import PhosphoLangchainCallbackHandler

class MyCustomLangchainCallbackHandler(PhosphoLangchainCallbackHandler):
    # The full list of callbacks is available here:
    # <https://python.langchain.com/docs/modules/callbacks/>

    def on_agent_finish(self, finish: AgentFinish, **kwargs: Any) -> Any:
        """Run on agent end."""
        # Do something custom here
        self.phospho.log(input="...", output="...")

question = "What is the speed of the Concorde?"
response = retrieval_chain.invoke(
    question,
    # Pass the custom handler instead of the default one
    config={"callbacks": [
        MyCustomLangchainCallbackHandler(),
    ]},
)

Conclusion

In conclusion, enhancing the performance and user satisfaction of your Langchain agent involves adding analytics into your development process.

By strategically addressing both performance and product analytics, you can ensure that your agent not only runs smoothly but also delivers a positive experience to end users.
